Multimodal LLMs for Computer Vision Tasks

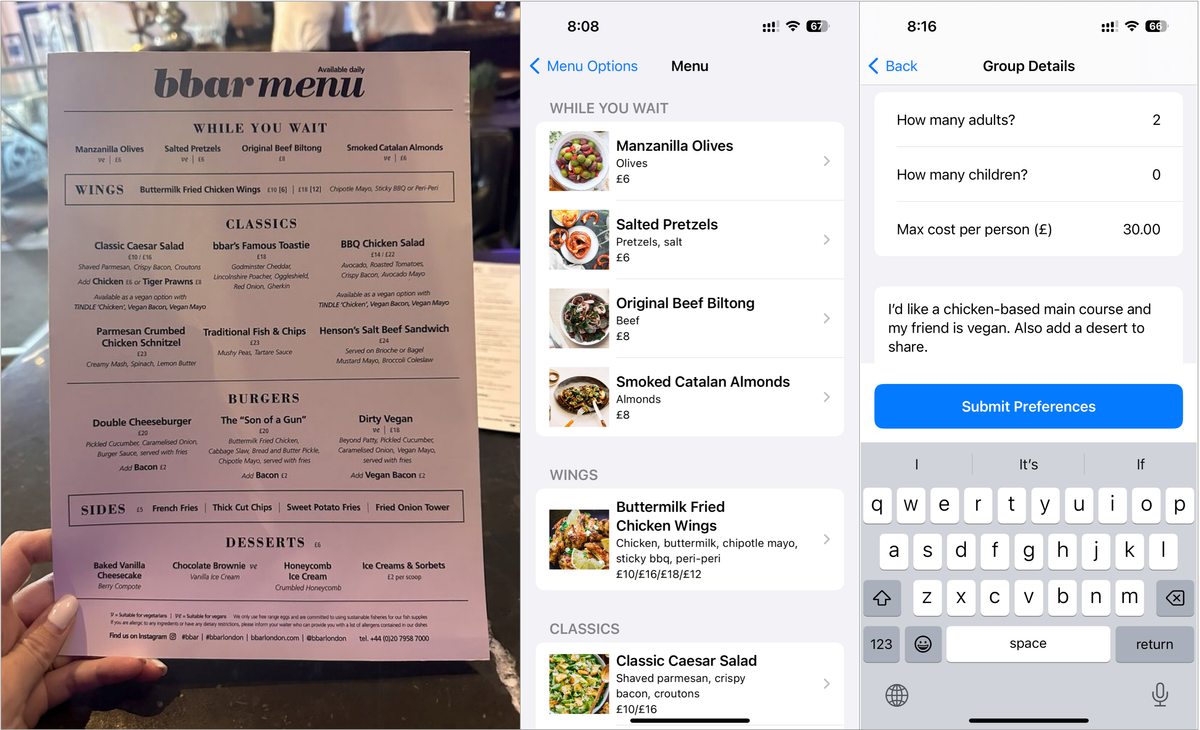

An MLLM-powered application for translating, visualizing, and selecting menu items

Video Overview

GitHub repo: https://github.com/yuzom/menu-lens

Frontend: contact me for beta access to the app

Introduction

Have you ever had trouble deciding what to order at a restaurant? I definitely have, especially at Italian, French, and other international restaurants. The issue is that menus usually have too many choices, don't have pictures of the dishes, and are sometimes in a language you don't understand.

For my computer science final project at Queen Mary, I created a full-stack app that translates, visualizes, and selects menu items for you. I built the frontend in Swift and the backend in Python, using the FastAPI framework and a PostgreSQL database.

Research

Menus have too many items. Johns et al. (2013) found that the optimal number of menu choices is between 6 and 10, yet some restaurants list literally hundreds of items. Furthermore, numerous studies show that too many choices lead to worse outcomes for consumers:

- A decreased likelihood of being satisfied with their decisions (Botti & Iyengar, 2004).

- Reduced confidence in having selected the best option (Haynes, 2009).

- A greater tendency to experience post-decision regret (Inbar et al., 2011).

People understand images better. Lee & Kim (2020) showed that video and picture menus elicit the highest level of mental imagery, while conventional menus rank the lowest. Studies also show that illustrations combined with text result in 36% higher learning scores than text alone (Levie & Lentz, 1982).

Most native English speakers need translation. Only 35% of the UK population aged 25-64 reported knowing one or more foreign languages (European Commission, 2016), so many British travelers are likely to encounter menus written in a language they cannot understand.

System Design

Frontend. I used Swift to code my frontend, with SwiftUI as the UI framework. To compensate for the limited screen space on phones, the buttons and information are spread across 20 different views. The app is distributed through TestFlight, Apple's beta testing program.

Backend. I used Python to code my backend. FastAPI was chosen as the API framework for its ease of use and its high performance, which comes from native support for asynchronous request handling.
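The benefit of async handling is easiest to see when one request has to wait on several slow network calls. The stdlib-only sketch below assumes, hypothetically, that the backend looks up one image per dish after a menu has been translated; `fetch_image_url` and `fetch_all_images` are illustrative names that only simulate latency with `asyncio.sleep`, not the project's actual code. Because each lookup awaits instead of blocking, `asyncio.gather` lets the waits overlap.

```python
import asyncio
import time


async def fetch_image_url(dish: str, delay: float) -> str:
    """Hypothetical stand-in for one image-search request; latency is simulated."""
    await asyncio.sleep(delay)  # non-blocking wait: the event loop can run other tasks
    return "https://example.com/images/" + dish.replace(" ", "-") + ".jpg"


async def fetch_all_images(dishes: list[str], delay: float = 0.2) -> dict[str, str]:
    """Start one lookup per dish and await them all concurrently."""
    urls = await asyncio.gather(*(fetch_image_url(d, delay) for d in dishes))
    return dict(zip(dishes, urls))  # gather returns results in input order


if __name__ == "__main__":
    dishes = ["risotto alla milanese", "coq au vin", "tiramisu"]
    start = time.perf_counter()
    images = asyncio.run(fetch_all_images(dishes))
    elapsed = time.perf_counter() - start
    print(images)
    # The three 0.2 s waits overlap, so this takes roughly 0.2 s rather than 0.6 s.
    print(f"elapsed: {elapsed:.2f} s")
```

In FastAPI, a path operation declared with `async def` could `await fetch_all_images(...)` directly, and while it waits the event loop stays free to serve other requests.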

Database. I used PostgreSQL for my database, hosted on the same server as the Python backend. SQLAlchemy handles Object Relational Mapping (ORM).

External APIs. OpenAI’s GPT-4o is used for its advanced image understanding and natural language processing capabilities. The Google Image Search API fetches image URLs, so the app can display a wide range of high-quality images without hosting them itself.
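As a rough sketch of how these two services could be called, the code below only builds requests and parses responses (no network access) using the standard library. It assumes OpenAI's Chat Completions endpoint with the menu photo sent as a base64 data URL, and it assumes the "Google Image Search API" is Google's Custom Search JSON API queried with `searchType=image`. The prompt text and the `items`/`original`/`english` JSON schema are hypothetical, not the app's actual format.

```python
import base64
import json
from urllib.parse import urlencode

GOOGLE_CSE_URL = "https://www.googleapis.com/customsearch/v1"

# Hypothetical prompt and output schema; the app's real prompt is not published here.
MENU_PROMPT = (
    "List every dish on this menu and translate it into English. "
    'Return JSON of the form {"items": [{"original": "...", "english": "..."}]}.'
)


def build_menu_request(image_bytes: bytes, mime: str = "image/jpeg") -> dict:
    """Build a Chat Completions request body that sends a menu photo to GPT-4o."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "response_format": {"type": "json_object"},  # JSON mode; the prompt must mention JSON
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": MENU_PROMPT},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


def parse_menu_reply(reply: dict) -> list[dict]:
    """Pull the menu items out of a Chat Completions response body."""
    content = reply["choices"][0]["message"]["content"]
    return json.loads(content)["items"]


def build_image_search_url(api_key: str, engine_id: str, dish: str, num: int = 3) -> str:
    """Build a Custom Search JSON API query restricted to image results."""
    params = {"key": api_key, "cx": engine_id, "q": dish, "searchType": "image", "num": num}
    return f"{GOOGLE_CSE_URL}?{urlencode(params)}"


def image_links(search_response: dict) -> list[str]:
    """Each image result's URL is in its 'link' field; 'items' is absent when nothing matched."""
    return [item["link"] for item in search_response.get("items", [])]
```

The request body would be POSTed to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header, and only the returned image URLs need to be stored or forwarded to the iOS client.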

Security Features. Because I built the full-stack application from the ground up without a managed backend platform such as Firebase, I had to implement several security features myself. OAuth2 with JSON Web Tokens (JWT) handles user authentication, and user passwords are hashed with bcrypt, so they are never stored as plaintext. Secrets such as API keys are kept in a .env file that is excluded from version control, so it is never shared online. Apple’s App Transport Security (ATS) ensures that all of the iOS app’s connections use HTTPS, and an NGINX server with SSL/TLS encryption enforces secure communication. Firewall rules on the Linux server allow only HTTP, HTTPS, and SSH connections.
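To make the JWT part concrete, below is a stdlib-only sketch of how an HS256-signed access token can be created and verified. It is illustrative only: a FastAPI backend would normally use a maintained library (for example PyJWT or python-jose) rather than hand-rolled code, and the function names, the `sub`/`exp` claims, and the 30-minute lifetime are assumptions, not this app's settings. In a typical OAuth2 password flow, the server checks the submitted password against its bcrypt hash, returns a token like this, and the client then sends it in an `Authorization: Bearer` header. Password hashing itself is not shown, since bcrypt is not part of Python's standard library.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw: bytes) -> str:
    """Base64url without '=' padding, as JWTs require."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Restore the stripped '=' padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def create_token(subject: str, secret: bytes, ttl_seconds: int = 1800) -> str:
    """Sign a short-lived access token with HMAC-SHA256 (JWT algorithm 'HS256')."""
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {"sub": subject, "exp": int(time.time()) + ttl_seconds}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def verify_token(token: str, secret: bytes) -> dict:
    """Return the payload if the signature and expiry are valid; raise ValueError otherwise."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":  # reject unexpected algorithms such as "none"
        raise ValueError("unsupported algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):  # constant-time compare
        raise ValueError("bad signature")
    payload = json.loads(_b64url_decode(payload_b64))
    if payload["exp"] < time.time():
        raise ValueError("token expired")
    return payload
```

The signing secret would live in the same .env file as the API keys, so a leaked repository would not let anyone forge tokens.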

Results

The app received generally positive feedback from classmates and friends who tried it on TestFlight. The statement “I found the menu conversion and menu viewing easy and intuitive to use” received an average rating of 4.5/5, and “The digital menu gave me the information I desired” received an average rating of 4.6/5.

Closing Remarks

At the beginning of this project, I set out with three goals in mind: (1) complete a working and sufficiently complex MSc project, (2) create software that I would actually use, and (3) use languages and frameworks that are widely used in industry.

| Previous computer science experience | Newly learned experience |
|---|---|
| C programming / data structures | FastAPI / Postman, HTTP methods |
| MATLAB / Simulink, computer vision toolkit | Security protocols / JWT, OAuth2, Bcrypt |
| Assembly programming | PostgreSQL / SQLAlchemy, Alembic |
| Python basics | Swift / UIKit, SwiftUI |
| Python for data analysis | Linux server deployment |
| Oracle SQL / databases | |
| Security basics | |
| Interactive system design theory | |

A key strength of this project was the opportunity to learn full-stack development, an area in which I had limited prior exposure. My computing-related studies as a mechanical engineer focused primarily on embedded systems and scientific computing, with minimal emphasis on web frameworks or user interface design.